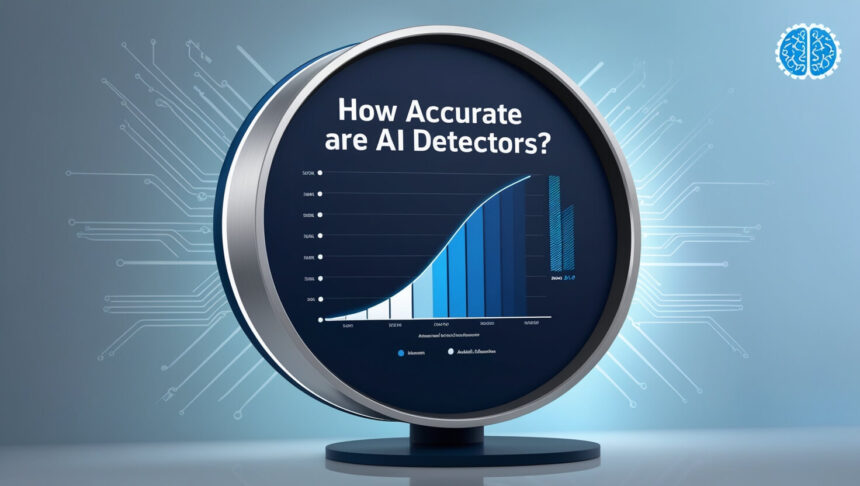

In this article, I will discuss how accurately AI detectors spot text written by machines. Because artificial intelligence is improving fast, identifying such text gets harder every day.

These detectors analyze wording in different ways, yet their results can be hit or miss. Knowing where the tools shine, and where they flop, lets people trust them wisely while still leaning on human review.

What Are AI Detectors?

AI detectors are handy tools that spot whether a piece of writing came from a computer program instead of a real person.

They look at word patterns, sentence rhythm, and other language quirks to tell the two apart. Schools, magazines, and social-media platforms lean on these detectors to keep things honest and stop dishonest use of automated text.

Powered by machine-learning models and statistical analysis, these systems aim to flag AI-written copy, but how reliable they are depends on the underlying technology and the situation.

How Accurate Are AI Detectors?

Accuracy Varies by Tool

Reported accuracy differs from tool to tool, with figures usually landing between 70% and 90%.

Depends on AI Model

Cutting-edge language models sound so human that even top tools struggle.

False Positives

Genuine human writing can get wrongly tagged as machine-made.

False Negatives

AI text that has been tweaked by hand may slip past a detector unnoticed.

Training Data Quality

Systems fed broad, varied examples spot fakes more often.

Text Length & Complexity

Long, dense articles give detectors more clues to chew on.

Evolving AI Technology

As AI writing models improve, detectors have to sprint just to keep up.

Human Judgment Needed

Machine scores are best when a real person double-checks.
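A single accuracy percentage hides the split between the false positives and false negatives described above. The sketch below uses made-up counts, not results from any real detector, to compute accuracy alongside both error rates; the `ConfusionCounts` and `summarize` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Outcome counts from testing a detector on labeled texts (hypothetical numbers)."""
    true_pos: int   # AI text correctly flagged
    false_pos: int  # human text wrongly flagged
    true_neg: int   # human text correctly passed
    false_neg: int  # AI text that slipped through


def summarize(c: ConfusionCounts) -> dict[str, float]:
    """Compute overall accuracy plus the two error rates it can hide."""
    total = c.true_pos + c.false_pos + c.true_neg + c.false_neg
    return {
        "accuracy": (c.true_pos + c.true_neg) / total,
        # Share of human-written texts that were wrongly flagged.
        "false_positive_rate": c.false_pos / (c.false_pos + c.true_neg),
        # Share of AI-written texts that went undetected.
        "false_negative_rate": c.false_neg / (c.false_neg + c.true_pos),
    }


# Invented test set: 500 human texts and 500 AI texts.
counts = ConfusionCounts(true_pos=420, false_pos=60, true_neg=440, false_neg=80)
for name, value in summarize(counts).items():
    print(f"{name}: {value:.0%}")
```

In this invented case the detector is 86% accurate, yet it still flags 12% of the human-written texts, roughly one in eight, which is why a headline accuracy figure alone says little about how often innocent writers get caught.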

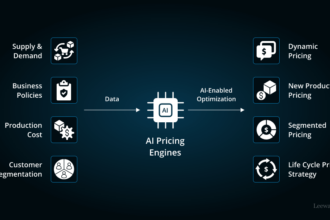

How Do AI Detectors Work?

Data Training: First, the tool studies a giant pile of text that mixes human work with AI samples.

Feature Analysis: Next, it looks at word choice, grammar, rhythm, and other small clues in the language.

Pattern Recognition: From those clues, the detector spots habits or oddities that lean toward machine writing.

Machine Learning Models: Powerful algorithms, often neural networks, pick up subtle patterns that human authors rarely produce.

Probability Scoring: The model then outputs a score estimating the probability that the passage came from an AI.

Threshold Setting: That score is compared against a preset threshold to decide whether the piece gets flagged.

Continuous Updating: The system is retrained regularly so it can keep pace with newer, smarter AI models.

Output Report: Finally, users get a clear reading with confidence numbers or simple yes/no flags.
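The steps above can be compressed into a toy sketch. It does not reproduce any particular product: the two features (sentence-length spread and vocabulary variety), the hand-set weights, and the 0.5 threshold are all assumptions chosen for illustration. A real detector would learn its weights during the data-training step and typically rely on far richer signals.

```python
import math
import re
import statistics

# Hypothetical weights and bias. A real detector would learn these from a large
# labeled corpus (the "Data Training" step); these values are invented.
WEIGHTS = {"sentence_length_spread": -0.4, "type_token_ratio": -6.0}
BIAS = 6.0
THRESHOLD = 0.5  # assumed cut-off for the yes/no flag


def extract_features(text: str) -> dict[str, float]:
    """Feature analysis: two simple stylistic signals (assumed, not from any real tool)."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    words = re.findall(r"[a-z']+", text.lower())
    return {
        # How much sentence length varies; a uniform rhythm scores low.
        "sentence_length_spread": statistics.pstdev(lengths) if lengths else 0.0,
        # Share of distinct words; repetitive wording scores low.
        "type_token_ratio": len(set(words)) / len(words) if words else 0.0,
    }


def ai_probability(features: dict[str, float]) -> float:
    """Probability scoring: weighted sum squashed into 0..1 by a logistic function."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1 / (1 + math.exp(-z))


def report(text: str) -> str:
    """Threshold setting and output report."""
    p = ai_probability(extract_features(text))
    verdict = "flagged as likely AI" if p >= THRESHOLD else "not flagged"
    return f"AI probability {p:.0%}: {verdict}"


print(report("The cat sat. The cat sat. The cat sat."))
print(report("I woke late. Coffee first, then a long walk along the river "
             "where the herons stand motionless in the shallows. Rain."))
```

The repetitive sample scores high because its sentences are identical in length and reuse the same three words, while the varied passage lands well below the threshold. The same logic also shows a weakness: plain, formulaic human writing could trip these signals and produce a false positive.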

Factors Affecting AI Detector Accuracy

Quality of Training Data: The larger and more varied the samples, the sharper the detectors become.

Sophistication of AI Models: Cutting-edge generators craft text so human-like that spotting fakes grows tough.

Text Length: Longer pieces offer more clues, letting detectors build a fuller picture.

Text Complexity: Plain, formula-driven writing stands out quickly, while layered, creative prose blends in.

Editing and Paraphrasing: Human tweaks and rewording can hide the telltale signs left by machines.

Detector Algorithm: Whether it relies on deep-learning models or hand-coded rules shapes how it judges text.

Threshold Sensitivity: An overly sensitive threshold flags too much genuine writing, while a lenient one misses clear fakes, so finding the sweet spot is tricky.

Contextual Understanding: Without broader context, detectors can read a statement one way and guess wrong.
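The threshold trade-off can be seen with a handful of invented scores. The numbers below come from no real detector, and `error_counts` is a hypothetical helper that counts both kinds of mistake at a given cut-off.

```python
# Invented detector scores (probability of AI) for texts whose true origin is known.
human_scores = [0.05, 0.12, 0.30, 0.45, 0.55, 0.62, 0.20, 0.08]
ai_scores = [0.40, 0.58, 0.70, 0.81, 0.88, 0.93, 0.97, 0.66]


def error_counts(threshold: float) -> tuple[int, int]:
    """Return (false positives, false negatives) when texts scoring >= threshold are flagged."""
    false_pos = sum(score >= threshold for score in human_scores)
    false_neg = sum(score < threshold for score in ai_scores)
    return false_pos, false_neg


for threshold in (0.3, 0.5, 0.7, 0.9):
    fp, fn = error_counts(threshold)
    print(f"threshold {threshold}: {fp} human texts flagged, {fn} AI texts missed")
```

On this data, a 0.3 threshold catches every AI sample but flags four human texts, while 0.9 flags no human text but misses six AI samples. No single setting removes both kinds of error, so the choice depends on which mistake is more costly in a given setting.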

Common Limitations and Challenges

False Positives

Detectors sometimes label a human draft as computer-made.

False Negatives

Well-edited AI copy can slip through unnoticed.

Evolving AI Models

Rapid upgrades to chatbot software outpace the programs built to detect it.

Limited Context Understanding

A detector usually scans syntax, not the writer's purpose.

Short Texts

Tiny paragraphs lack enough data for solid judgment.

Adversarial Manipulation

Savvy authors tweak punctuation and style to dodge the net.

Bias in Training Data

If the training set is uneven, results become unfair.

Over-reliance Risks

Blind faith in the tech can lead to false accusations and missed fakes.
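The short-text problem is partly plain statistics. Suppose, hypothetically, that a detector averaged a per-sentence score and that sentences behaved like independent samples (a simplification, since real sentences are correlated). The uncertainty of that average would then shrink with the square root of the number of sentences. The spread value below is invented.

```python
import math

# Assumed spread of a hypothetical per-sentence AI score; invented for illustration.
PER_SENTENCE_STD = 0.25


def standard_error(num_sentences: int, std: float = PER_SENTENCE_STD) -> float:
    """Standard error of a mean over independent sentences: std / sqrt(n)."""
    return std / math.sqrt(num_sentences)


for n in (3, 12, 60):
    print(f"{n:>2} sentences: average score uncertain by about ±{standard_error(n):.2f}")
```

Under these assumptions, three sentences leave an uncertainty of about 0.14, enough to push a borderline score across a 0.5 threshold in either direction, while sixty sentences narrow it to about 0.03.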

Ethical and Practical Implications

Privacy worries: Running scans on personal notes or emails raises clear consent and data-protection questions.

Reputation risk: Creative work or reports wrongly flagged as bot-made can damage a person's name and career.

Academic honesty: Detectors support fairness in school work but sometimes punish natural, unique voices.

Tech crutch: Leaning too heavily on software can dull human judgment and careful review.

Bias and fairness: Tools trained on narrow samples may flag certain styles, dialects, or groups unfairly.

Clear rules: Users deserve plain explanations of how scores are calculated before trusting the results.

Legal fallout: Wrong labels might spark disputes over copyright, credit, or ownership rights.

Mixing machines and eyes: The best protection blends detector results with smart human checking for fair outcomes.

Future Outlook: Improving AI Detection Reliability

Smarter Algorithms: Build sharper machine-learning programs that tell human text from AI text.

Teamwork Tests: Let software work side by side with human experts for checks and balances.

Never-ending Training: Feed detectors fresh AI samples every month so they never fall behind.

Context Sense: Teach systems to read meaning, not just words, and slash false alarms.

Shared Know-How: Swap detection tips between websites and apps so everyone benefits.

Clearer Answers: Make detectors explain their choices in simple language that users can trust.

Tough on Tricks: Add safeguards that stop people from disguising AI-generated text with sneaky edits.

Fair Rules: Write and follow ethical guides so detection tools are fair and respect privacy.
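For a detector built on a simple linear score, one way to give the plain-language explanations called for above is to list how much each feature pushed the result. The feature names, weights, and values below are hypothetical; detectors based on deep neural networks need dedicated attribution methods, which this sketch does not cover.

```python
# Hypothetical linear detector: weights and feature values are invented for illustration.
WEIGHTS = {
    "repetitive_phrasing": 2.0,
    "uniform_sentence_length": 1.5,
    "rare_vocabulary": -1.0,
}


def explain(features: dict[str, float]) -> list[str]:
    """List each feature's contribution (weight * value), largest effect first."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    lines = []
    for name, amount in ranked:
        if amount > 0:
            direction = "toward AI"
        elif amount < 0:
            direction = "toward human"
        else:
            direction = "no effect"
        lines.append(f"{name.replace('_', ' ')}: {amount:+.2f} ({direction})")
    return lines


sample = {"repetitive_phrasing": 0.8, "uniform_sentence_length": 0.6, "rare_vocabulary": 0.3}
for line in explain(sample):
    print(line)
```

A breakdown like this lets a reader see that repetitive phrasing drove the score, rather than being handed an unexplained percentage.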

Pros & Cons

| Pros of AI Detectors | Cons of AI Detectors |
|---|---|
| Help maintain content authenticity and integrity | Can produce false positives, flagging human writing incorrectly |
| Useful in education to prevent plagiarism | May miss sophisticated AI-generated text (false negatives) |
| Automate large-scale content monitoring | Accuracy decreases as AI writing becomes more advanced |
| Save time compared to manual review | Dependence may reduce critical human judgment |
| Aid in content moderation and compliance | Privacy concerns when scanning sensitive data |
| Continuously improving with new AI data | Biases in training data can affect fairness |
| Provide quick feedback with probability scores | Limited understanding of context and nuance |
| Encourage ethical AI use and transparency | Risk of misuse or wrongful accusations |

Conclusion

In short, AI detectors can be handy for spotting computer-written text, and most tests report accuracy between 70% and 90%.

Yet that number dips or climbs based on how advanced the original AI is, how good the training data was, and how tricky the language itself turns out to be. Because both the tools and the bots keep improving, the safest plan is to let an alert person double-check the results whenever possible.